Robots.txt

Also Known As robots exclusion protocol or robots exclusion standard, is a text file at the root of your site with a set of standard rules that direct web crawlers on what pages to access and the ones to exclude.

It’s designed to work with search engines, but surprisingly, it’s a source of SEO juice just waiting to be unlocked. In this article, you will learn everything about this teeny tiny text file including how to create a robots.txt file for SEO purposes.

It is important to note that a robots.txt file is not a HTML file and it has specific limitations and cannot entirely absolutely direct web crawlers since:

- Robots.txt instructions are directives only

- Different crawlers interpret syntax differently

- A robotted page can still be indexed if linked to from other sites

Web robots AKA Web Wanderers, Crawlers, or Spiders, are programs that traverse the Web automatically scouting for data. Major search engines like Google use them to index web pages content; spammers use them to scan for email addresses, and many other uses of web robots beyond the scope of this article.

You can use any simple text editor like Notepad (for windows), to create a robots.txt file. The text editor should be able to create standard ASCII or UTF-8 text files. However be advised to not use a word processor since word processors often save files in a proprietary format hence can add unexpected characters such as curly quotes, which may cause problems for searchrobots during crawling.

What is robots.txt used for?

Apart from the obvious reason of blocking web robots, its definitive use is:

1. Web Pages

For web pages, robots.txt should only be used to control crawling traffic, mainly because you don’t want your server to be overwhelmed by search engine crawlers or to waste crawl budget crawling unimportant or similar pages on your website. You should not use robots.txt as a means to hide your web pages from Google Search results because other pages might point to your page, and your page could get indexed that way, avoiding the robots.txt file. If you want to block your page from search results, try another method such as password protection, noindex tags or web directives.

2. Resource files

You can also use robots.txt to block certain resource files like unimportant images, scripts, or style files, if you think that pages loaded without such resources will not be significantly affected by the loss. Nonetheless, if the absence of these resources make a page harder to understand for search engine robots, then is not good to block them, or else the pages will be poorly analyzed by web crawlers.

3. Image files

robots.txt does prevent image files from appearing in Google search results. (However it does not prevent other pages or users from linking to your image.)

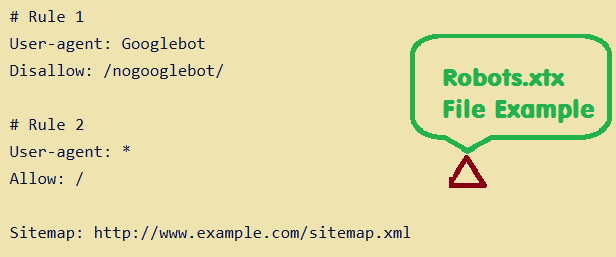

A Simple robots.txt Example

A robots.txt file consists of one or more rules. Each rule blocks or allows access for a given webcrawler to a specified file path in a site.

Below is a robots.txt file example with two standard rules:

# Rule 1 User-agent: Googlebot Disallow: /nogooglebot/ # Rule 2 User-agent: * Allow: / Sitemap: http://www.example.com/sitemap.xml

Robots.txt Example Explained:

- From the user agent named “Googlebot”, Google are given directed to not crawl the folder

http://example.com/nogooglebot/or any other subdirectories. - Rule 2, on the other hand gives instruction for searchbots/other user agents to access the entire site. (This could have been omitted and the result would be the same, as full access is the assumption.)

- The third part tells web crawlers that a site’s Sitemap is located at

http://www.example.com/sitemap.xml. This is not a mandatory part of the robots.txt file as the it can be ignored.

There is a more detailed example below.

The Basic robots.txt Guidelines

Here we are going to see some of the basic guidelines regarding robots.txt files. It is recommended for you to know the complete syntax of robots.txt files as the robots.txt syntax behaves differently with different environments.

Robots.txt Format Rules & File Location

Below are some strict format rules you need to adhere to while creating a robots.txt file for SEO(Search Engines Optimization). Here we go:

- You MUST name the file as robots.txt

- Your website should have only one robots.txt file.

- The robots.txt file must be located at the root of the website host that it applies to. For instance, to control crawling on all URLs below

http://www.example.com/, the robots.txt file must be located athttp://www.example.com/robots.txt. It cannot be placed in a subdirectory ( for instance,http://example.com/pages/robots.txt). If you’re unsure about how to access your website root or maybe need permissions to do so, then contact your web hosting company. - A robots.txt file can apply to subdomains (like,

http://blog.example.com/robots.txt) or on any non-standard port (like,http://example.com:2901/robots.txt). - Comments are just any lines.

The Basic Syntax of a Robots.txt File

Here’s the basic syntax of the robots.txt file:

- robots.txt must be an ASCII or UTF-8 text file. No other characters are permitted.

- A robots.txt file consists of one or more rules.

- Each rule consists of multiple directives (instructions), one directive per line.

- A rule gives the following information:

- Who the rule applies to (the user agent)

- Which directories or files that agent can access, and/or

- Which directories or files that agent cannot access.

- Rules are processed from top to bottom, and a user agent can match only one rule set, which is the first, most-specific rule that matches a given user agent.

- The default assumption is that a user agent can crawl any page or directory not blocked by a

Disallow:rule. - Rules are case-sensitive. For example,

Disallow: /page.htmlapplies tohttp://www.example.com/page.htmland nothttp://www.example.com/FILE.html.

The following directives are used in robots.txt files:

User-agent:[Required, one or more per rule] The name of a search engine robot (web crawler software) that the rule applies to. This is the first line for any rule. Most user-agent names are listed in the Web Robots Database or in the Google list of user agents. Supports the * wildcard for a path prefix, suffix, or entire string. Using an asterisk (*) as in the example below will match all crawlers except the various AdsBot crawlers, which must be named explicitly. (See the list of Google crawler names.) Examples:# Example 1: Block only Googlebot User-agent: Googlebot Disallow: / # Example 2: Block Googlebot and Adsbot User-agent: Googlebot User-agent: AdsBot-Google Disallow: / # Example 3: Block all but AdsBot crawlers User-agent: * Disallow: /

Disallow:[At least one or more Disallow or Allow entries per rule] A directory or page, relative to the root domain, that should not be crawled by the user agent. If a page, it should be the full page name as shown in the browser; if a directory, it should end in a / mark. Supports the * wildcard for a path prefix, suffix, or entire string.Allow:[At least one or more Disallow or Allow entries per rule] A directory or page, relative to the root domain, that should be crawled by the user agent just mentioned. This is used to override Disallow to allow crawling of a subdirectory or page in a disallowed directory. If a page, it should be the full page name as shown in the browser; if a directory, it should end in a / mark. Supports the * wildcard for a path prefix, suffix, or entire string.Sitemap:[Optional, zero or more per file] The location of a sitemap for this website. Must be a fully-qualified URL; Google doesn’t assume or check http/https/www.non-www alternates. Sitemaps are a good way to indicate which content Google should crawl, as opposed to which content it can or cannot crawl. Example:Sitemap: https://example.com/sitemap.xml Sitemap: http://www.example.com/sitemap.xml

Unknown keywords are ignored.

Another example file

A robots.txt file consists of one or more blocks of rules, each beginning with a User-agent line that specifies the target of the rules. Here is a file with two rules; inline comments explain each rule:

# Block googlebot from example.com/directory1/... and example.com/directory2/... # but allow access to directory2/subdirectory1/... # All other directories on the site are allowed by default. User-agent: googlebot Disallow: /directory1/ Disallow: /directory2/ Allow: /directory2/subdirectory1/ # Block the entire site from anothercrawler. User-agent: anothercrawler Disallow: /

Useful robots.txt rules

Here are some common useful robots.txt rules:

| Rule | Sample |

|---|---|

| Disallow crawling of the entire website. Keep in mind that in some situations URLs from the website may still be indexed, even if they haven’t been crawled. Note: this does not match the various AdsBot crawlers which must be named explicitly. | User-agent: * Disallow: / |

| Disallow crawling of a directory and its contents by following the directory name with a forward slash. Remember that you shouldn’t use robots.txt to block access to private content: use proper authentication instead. URLs disallowed by the robots.txt file might still be indexed without being crawled, and the robots.txt file can be viewed by anyone, potentially disclosing the location of your private content. | User-agent: * Disallow: /calendar/ Disallow: /junk/ |

| Allow access to a single crawler | User-agent: Googlebot-news Allow: / User-agent: * Disallow: / |

| Allow access to all but a single crawler | User-agent: Unnecessarybot Disallow: / User-agent: * Allow: / |

Disallow crawling of a single webpage by listing the page after the slash: | Disallow: /private_file.html |

Block a specific image from Google Images: | User-agent: Googlebot-Image Disallow: /images/dogs.jpg |

Block all images on your site from Google Images: | User-agent: Googlebot-Image Disallow: / |

Disallow crawling of files of a specific file type (for example, | User-agent: Googlebot Disallow: /*.gif$ |

Disallow crawling of entire site, but show AdSense ads on those pages, disallow all web crawlers other than Mediapartners-Google. This implementation hides your pages from search results, but the Mediapartners-Google web crawler can still analyze them to decide what ads to show visitors to your site. | User-agent: * Disallow: / User-agent: Mediapartners-Google Allow: / |

Match URLs that end with a specific string, use $. For instance, the sample code blocks any URLs that end with .xls: | User-agent: Googlebot Disallow: /*.xls$ |

Full robots.txt syntax

You can find the full robots.txt syntax here. Please read the full documentation, as the robots.txt syntax has a few tricky parts that are important to learn.

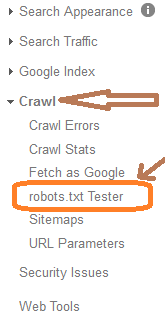

Testing your robots.txt file

You can submit your robots URL (http://example.com/robot.txt) to the Google Webmaster tools robots.txt Tester section to test your robots.txt . It is the most effective tool online. The tool operates in the same way as web robots like Googlebots would. To test your robots.txt file, follow these steps:

- Login to your Google Webmaster tools account, and select a site to manage.

- Then navigate to the robots.txt tester tool clicking on “crawl” then “robots.txt tester”.

- Open the tester tool for your site, and scroll through the

robots.txtcode to locate the highlighted syntax warnings and logic errors. The number of syntax warnings and logic errors is shown immediately below the editor. - Type in the URL of a page on your site in the text box at the bottom of the page.

- Then select the user-agent you wish to simulate in the dropdown list to the right of the text box.

- And click the TEST button to test access.

- Check to see if TEST button now reads ACCEPTED or BLOCKED to find out if the robots.txt URL you entered is blocked from web robots.

- Finally, edit the file on the page and save. It is vital to note that changes made in the page are not saved to your site! So as you do that, also save the changes to the robots.txt file on your website as this tool does not make changes to the actual file on your site, it only tests against it to see if it works according to your wishes.

If Google cannot find your robots.txt file, it returns an error like one below;

Before Googlebot crawls a site, it accesses its robots.txt file to determine if the website is blocking Google from crawling any pages or URLs. If a robots.txt file exists but is unreachable (in other words, if it doesn’t return a 200 or 404 HTTP status code), Google says it will postpone the crawl rather than risk crawling URLs that you do not wish crawled. When that happens, Googlebots will return to your site and crawl it as soon as accessing your robots.txt file is possible.

Limitations of the robots.txt Tester tool:

- The robots.txt Tester tool only tests your

robots.txtwith Google user-agents or web crawlers, like Googlebot. It cannot predict how other webbots interpret yourrobots.txtfile. - When you make canges with the editor, they cannot be automatically saved to your web server. You need to do it manually on your webserver.

How to Create robots.txt Files in WordPress

WordPress is the world’s most popular CMS (Content Management System) and early every blog uses it. It is a great tool for an organisation point of view. However, this resourceful toolskit most of the time comes with pre-set directives. In WordPress for instance, your robots.txt file is pre-installed for your site. And since most WordPress users are not so much into the backend coding of sites (they mostly customize themes), this can feel tricky but it’s actually not. Below we will see how to create a robots.txt file in WordPress.

Since we said above that a robots.txt file can only be at the root directory of a site, to locate yours just go to http://YourSite.com/robots.txt. Then follow the following steps to create a robots.txt file in WordPress:

- Open a text editor like Notepad on your computer

- Save your file as robots.txt

- Write your robots.txt rules on your file and save it.

- When done, login to your web hosting account “file manager” and upload your robots.txt file to your root directory (/) commonly at your “Public HTML” folder.

- Overwrite the existing robots.txt file if it exists to replace it with your new one.

- Finally, Open Google webmaster tools and go to “robots.txt tester” under “crawl” section and test your robots.txt file

- If you find errors or something does not work, fix it by using the live preview at this Google robots.txt tester.

- Then finally download your completed robots.txt file and follow the steps above to upload it to your website root directory.

And that simply how you edit or create a robots.txt file in WordPress, just like a normal web file.

General Robots Questions

Does my website need a robots.txt file?

Nope. When searchbots visit a site, they first ask for permission to crawl by attempting to retrieve the robots.txt file. A website without a robots.txt file, robots Meta tags or X-Robots-Tag HTTP headers will generally be crawled and indexed normally.

Which method should I use?

It depends. In short, there are good reasons to use each of these methods:

- robots.txt: Use it if crawling of your content is causing issues on your server. For example, you may want to disallow crawling of infinite calendar scripts. You should not use the robots.txt to block private content (use server-side authentication instead), or handle canonicalization. If you must be certain that a URL is not indexed, use the robots Meta tag or X-Robots-Tag HTTP header instead.

- robots Meta tag: Use it if you need to control how an individual HTML page is shown in search results (or to make sure that it’s not shown).

- X-Robots-Tag HTTP header: Use it if you need to control how non-HTML content is shown in search results (or to make sure that it’s not shown).

Can I use these methods to remove someone else’s site?

Absolutely NO. These methods are only valid for sites where you can modify the code or add files. If you want to remove content from a third-party site, you need to contact the webmaster to have them remove the content.

Robots.txt questions

I use the same robots.txt for multiple websites. Can I use a full URL instead of a relative path?

No. The directives in the robots.txt file (with exception of “Sitemap:”) are only valid for relative paths.

Can I place the robots.txt file in a subdirectory?

No. The file must be placed in the topmost directory of the website.

I want to block a private folder. Can I prevent other people from reading my robots.txt file?

No. The robots.txt file may be read by various users. If folders or filenames of content should not be public, they should not be listed in the robots.txt file. It is not recommended to serve different robots.txt files based on the user-agent or other attributes.

Do I have to include an allow directive to allow crawling?

No, you do not need to include an allow directive. The allow directive is used to override disallow directives in the same robots.txt file.

What happens if I have a mistake in my robots.txt file or use an unsupported directive?

Web-crawlers are generally very flexible and typically will not be swayed by minor mistakes in the robots.txt file. In general, the worst that can happen is that incorrect / unsupported directives will be ignored. Bear in mind though that Google can’t read minds when interpreting a robots.txt file; we have to interpret the robots.txt file we fetched. That said, if you are aware of problems in your robots.txt file, they’re usually easy to fix.

What program should I use to create a robots.txt file?

You can use anything that creates a valid text file. Common programs used to create robots.txt files are Notepad, TextEdit, vi, or emacs. Read more about creating robots.txt files. After creating your file, validate it using the robots.txt tester.

If I block Google from crawling a page using a robots.txt disallow directive, will it disappear from search results?

Blocking Google from crawling a page is likely to decrease that page’s ranking or cause it to drop out altogether over time. It may also reduce the amount of detail provided to users in the text below the search result. This is because without the page’s content, the search engine has much less information to work with.

However, robots.txt Disallow does not guarantee that a page will not appear in results: Google may still decide, based on external information such as incoming links, that it is relevant. If you wish to explicitly block a page from being indexed, you should instead use the noindex robots Meta tag or X-Robots-Tag HTTP header. In this case, you should not disallow the page in robots.txt, because the page must be crawled in order for the tag to be seen and obeyed.

How long will it take for changes in my robots.txt file to affect my search results?

First, the cache of the robots.txt file must be refreshed (we generally cache the contents for up to one day). Even after finding the change, crawling and indexing is a complicated process that can sometimes take quite some time for individual URLs, so it’s impossible to give an exact timeline. Also, keep in mind that even if your robots.txt file is disallowing access to a URL, that URL may remain visible in search results despite that fact that we can’t crawl it. If you wish to expedite removal of the pages you’ve blocked from Google, please submit a removal request via Google Search Console.

How do I specify AJAX-crawling URLs in the robots.txt file?

You must use the crawled URLs when specifying URLs that use the AJAX-crawling proposal.

How can I temporarily suspend all crawling of my website?

You can temporarily suspend all crawling by returning a HTTP result code of 503 for all URLs, including the robots.txt file. The robots.txt file will be retried periodically until it can be accessed again. We do not recommend changing your robots.txt file to disallow crawling.

My server is not case-sensitive. How can I disallow crawling of some folders completely?

Directives in the robots.txt file are case-sensitive. In this case, it is recommended to make sure that only one version of the URL is indexed using canonicalization methods. Doing this allows you to simplify your robots.txt file. Should this not be possible, we recommended that you list the common combinations of the folder name, or to shorten it as much as possible, using only the first few characters instead of the full name. For instance, instead of listing all upper and lower-case permutations of “/MyPrivateFolder”, you could list the permutations of “/MyP” (if you are certain that no other, crawlable URLs exist with those first characters). Alternately, it may make sense to use a robots Meta tag or X-Robots-Tag HTTP header instead, if crawling is not an issue.

I return 403 “Forbidden” for all URLs, including the robots.txt file. Why is the site still being crawled?

The HTTP result code 403—as all other 4xx HTTP result codes—is seen as a sign that the robots.txt file does not exist. Because of this, crawlers will generally assume that they can crawl all URLs of the website. In order to block crawling of the website, the robots.txt must be returned normally (with a 200 “OK” HTTP result code) with an appropriate “disallow” in it.

Robots Meta tag questions

Is the robots Meta tag a replacement for the robots.txt file?

No. The robots.txt file controls which pages are accessed. The robots Meta tag controls whether a page is indexed, but to see this tag the page needs to be crawled. If crawling a page is problematic (for example, if the page causes a high load on the server), you should use the robots.txt file. If it is only a matter of whether or not a page is shown in search results, you can use the robots Meta tag.

Can the robots Meta tag be used to block a part of a page from being indexed?

No, the robots Meta tag is a page-level setting.

Can I use the robots Meta tag outside of a <head> section?

No, the robots Meta tag currently needs to be in the <head> section of a page.

Does the robots Meta tag disallow crawling?

No. Even if the robots Meta tag currently says noindex, we’ll need to recrawl that URL occasionally to check if the Meta tag has changed.

How does the nofollow robots Meta tag compare to the rel="nofollow" link attribute?

The nofollow robots Meta tag applies to all links on a page. The rel="nofollow" link attribute only applies to specific links on a page. For more information on the rel="nofollow" link attribute, please see our Help Center articles on user-generated spam and the rel=”nofollow”.

X-Robots-Tag HTTP header questions

How can I check the X-Robots-Tag for a URL?

A simple way to view the server headers is to use a web-based server header checker or to use the “Fetch as Googlebot” feature in Google Search Console.